Rise of the Underdog

Although it is more powerful than it has ever been, Wikipedia is reliant on third parties who are increasingly ingesting its facts and severing them from their source. To survive, Wikipedia needs to initiate a renewed campaign for the right to verifiability. This article appears in the book “Wikipedia@20: Stories of an Incomplete Revolution” edited by Joseph Reagle and Jackie Koerner and published by MIT Press. Find other chapters on pubpub or buy the book.

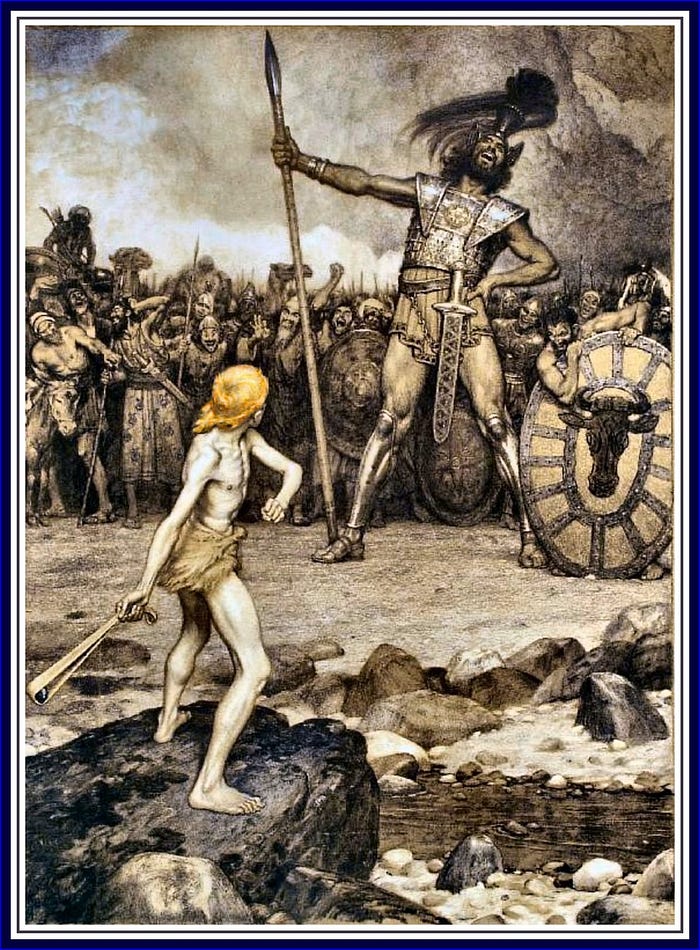

We all love an underdog. And when Nature announced in 2005 that Wikipedia’s quality was almost as good as Encyclopædia Britannica for articles about science, I celebrated. I celebrated because Wikipedia was the David to Big Media’s Goliath: the little guy, the people’s encyclopedia, the underdog who had succeeded against all odds.

Since then, Wikipedia has moved from the thirty-seventh most visited website in the world into the top ten. Wikipedia is now the top dog for facts as the world’s most powerful platforms use the website to power their search engines and virtual assistants. Those platforms extract information from Wikipedia articles to fuel the question-and-answer systems that drive search engines like Google and digital assistants including Amazon’s Alexa, Apple’s Siri and Google’s Assistant.

It is tempting to see Wikipedia in 2020 as the new top dog in the world of facts. The problem is that Wikipedia’s status is dependent almost entirely on Google, and the ways in which Wikipedia’s content is increasingly represented without credit by major platforms signal Wikipedia’s greatest existential threat to date.

Removing links back to Wikipedia as the source of answers to user questions prevents users from visiting Wikipedia to donate or volunteer. More important, however, are the ways in which unattributed facts violate the principle of verifiability on which Wikipedia was founded.

Within the bounds of Wikipedia, users are able to question whether statements are correctly attributed to reliable sources. They are able to contribute to discussions toward consensus and to recognize the traces that signal how unstable or stable statements of fact are. But when those statements are represented without attribution or links back to their messy political and social contexts, they appear as the objective, natural, and stable truth.

In 2020, there are new Goliaths in town in the form of the world’s most powerful technology companies, and Wikipedia must rearticulate its foundational principles and highlight its underdog status if it wishes to reinstitute itself as a bastion of justice on the internet.

Once the Underdog

The underdog is a common archetype of some of the most enduring narratives, from the world of sport to politics. Studying the appeal of underdogs over a number of years, Vandello, Goldschmied, and Michniewicz define underdogs as “disadvantaged parties facing advantaged opponents and unlikely to succeed.”[1] They write that there are underdog stories from cultures around the world: from the story of David and Goliath, in which the smaller David fights and kills the giant, Goliath, to the Monkey and the Turtle, a Philippine fable in which the patient turtle outwits the physically stronger and selfish monkey.

Underdogs are appealing because they offer an opportunity for redemption: a chance for the weaker individual or group to face a stronger opponent and to beat them, despite the odds leaning significantly against them. Usually, underdogs face off against better resourced competitors in a zero-sum game such as an election or sporting match, but underdogs don’t need to win to be appealing. As Vandello, Goldschmied, and Michniewicz state, they just have to face up to the bigger, more powerful, better resourced competitor in order to win the hearts of the public.

With the headline “Internet Encyclopaedias Go Head to Head” (see chapter 2), a Nature study represented such a competition when it was published in 2005.[2] The study pitted a four-year-old Wikipedia against the centuries-old Britannica by asking academic experts to compare forty-two articles relating to science. The verdict? The average science entry in Wikipedia contained around four inaccuracies to Britannica’s three, leading Nature to announce that “Jimmy Wales’ Wikipedia comes close to Britannica in terms of the accuracy of its science entries.”

The Nature study is now the stuff of legend. Although it was criticized for the way that articles were compared and the way that the study was reported, it is mostly used as evidence of the quality of Wikipedia in comparison with traditionally authored reference works.[3] For those of us working in the free and open source software and open content movement, it confirmed what we already thought we knew: that online resources like Wikipedia could attain the same (if not greater) level of quality that traditionally published resources enjoyed because they were open for the public to improve. It gave credence to the idea that content as well as software benefited from openness because, as Eric Raymond famously wrote, “with enough eyes, all bugs are shallow.”[4]

In 2005, Wikipedia was being developed on the back of volunteer labor, a handful of paid employees, and a tiny budget. In 2005, I was deep into my tenure as a digital commons activist. As the public lead for Creative Commons South Africa, the executive director of iCommons, and a member of the advisory board of the Wikimedia Foundation, I was in the business of selling openness to the world. In photographs from 2005, I see myself smiling, surrounded by like-minded people from around the world who would meet at the annual iCommons Summit or Wikimania. We would talk about how copyleft was critical to a more innovative internet. For me, freedom and openness via copyleft licenses provided the opportunity for greater access to educational materials critical for countries like my own, burdened by extreme copyright regimes that benefited corporate publishing houses outside of South Africa at the expense of access to knowledge. I believed that open content and free and open source software were in keeping with the sharing of culture emblematic of ubuntu, the Zulu and Xhosa term for “humanity toward others”: the belief in a universal bond that connects people around the world.

Life as an “internet rights activist” wasn’t all glamorous. Back home in Johannesburg, it meant countless meetings with anyone who would listen. Talking to funders, academics, lawyers, musicians, publishers, and authors, we would present copyleft as an obvious choice for public knowledge, creativity, education, and creative industries to tiny audiences of skeptical or curious individuals. In my case, it meant tears of frustration when debating intellectual property lawyers about the virtues of the South African Constitutional Court’s finding in favor of a young T-shirt producer in a trademark dispute with a multinational beer company. And righteous indignation when hearing about underhanded attempts by large software corporations to stem the tide of open source to protect their hold on public education in Namibia.

I celebrated Wikipedia’s success because it was a signal from the establishment that openness was a force to be recognized. I celebrated because Wikipedia had become emblematic of the people of the internet’s struggle against Big Media. It signaled success against corporate media giants like the Motion Picture Association of America and its members who were railing aggressively against the ideology and practice of free and open source software and open content because it was considered a significant threat to their business models. In 2005, the peer-to-peer firms Napster, Grokster, and StreamCast had been successfully sued by rights holders, and Lawrence Lessig had lost his case to prevent the US Congress from extending US copyright terms. We all needed a hero, and we needed a few wins under our righteous belts.

When the Nature study was published in 2005, Wikipedia represented “the people of the internet” against an old (and sizeable) Big Media who railed against any change that would see them threatened. Ironically, the company behind the Encyclopædia Britannica was actually ailing when the Nature study drove the final nail into its coffin. But no matter: Britannica represented the old and Wikipedia the new. A year later, in 2006, Time Magazine’s Person of the Year reinforced this win. Awarding the Person of the Year to “You,” the editorial argued that ordinary people now controlled the means of producing information and media because they dissolved the power of the gatekeepers who had previously controlled the public’s access to information.

[The year 2006 is] a story about community and collaboration on a scale never seen before. It’s about the cosmic compendium of knowledge Wikipedia and the million-channel people’s network YouTube and the online metropolis MySpace. It’s about the many wresting power from the few and helping one another for nothing and how that will not only change the world, but also change the way the world changes.[5]

It is this symbolic value that makes underdogs so powerful. Vandello, Goldschmied, and Michniewicz argue that we root for underdogs not only because we want them to succeed but also because we feel “it is right and just for them to do so.” We dislike the fact that there is inequality in society — that some individuals or groups face a much more difficult task because they are underresourced. Rooting for the underdog enables us to reconcile or face this injustice (albeit from a distance).

Wikipedia Wars

With few resources and Big Media set against them, Wikipedia was once seen as the underdog to traditional media. As the bastion of openness against the selfishness of proprietary media, its fight was seen as a just one. This was fifteen years ago, and much has changed.

The encyclopedia that was pitted as Wikipedia’s competitor, Britannica, is now all but dead (the final print version was published in 2010). Wikipedia has moved from the thirty-seventh most visited website in the world when Nature published its study in 2005 to the top ten with many billions of page views a month.

Donations to Wikipedia’s host nonprofit, the Wikimedia Foundation, increased dramatically — from about $1.5 million in 2006 to almost $100 million in 2018. From St. Petersburg, Florida, with three employees to corporate headquarters in the heart of San Francisco, California, and a staff of over 250, the Wikimedia Foundation’s operating budget and cash reserves are so healthy that some have argued that Wikipedia doesn’t need your donations and that the increased budget is turning the foundation into a corporate behemoth that is unaccountable to its volunteers.[6]

If there is a political battle being fought (between politicians, policies, ideologies, or identities), there will be a parallel conflict on Wikipedia. On English Wikipedia, for example, Donald Trump’s page is in a constant state of war. In 2018, an edit war ensued about whether to include information about Trump’s performance at the 2018 US-Russia summit in Helsinki.[7] On the Brexit article, editors have received death threats and doxing attempts when editing information about the impact of Brexit on the United Kingdom and Europe.[8] After Time Magazine published a story by Aatish Taseer that was critical of Indian Prime Minister Narendra Modi, Taseer’s English Wikipedia page was vandalized, and screenshots of the vandalized page were distributed over social media as evidence.[9]

The above examples relate to obviously political subjects, but Wikipedia wars are being fought beyond the bounds of politicians’ biographies. Representation of current events on Wikipedia is almost always hotly contested. For almost every terrorist attack, natural disaster, or political protest, there will be attempts by competing groups to wrest control over the event narrative on Wikipedia to reflect their version of what happened, to whom it happened, and the reason why it happened. Unexpected events have consequences — for victims, perpetrators, and the governments who distribute resources as a result of such classifications. Wikipedia is therefore regularly the site of battles over what becomes recognized as the neutral point of view, the objective fact, the commonsense perspectives affecting the decisions that ultimately determine who the winners and losers are in the aftermath of an event.

Because of Wikipedia’s growing authority, governments now block the site to prevent it from being used to distribute what they deem to be subversive ideas. Wikipedia is currently blocked in China and Turkey, but countries including France, Iran, Pakistan, Russia, Thailand, Tunisia, the United Kingdom, and Venezuela have blocked specific content from a period of a few days to many years.

In 2013, it was found that Iran’s censorship of Persian Wikipedia targeted a wide breadth of political, social, religious, and sexual themes, including information related to the Iranian government’s human rights record and individuals who have challenged authorities.[10] In the United Kingdom, the Wikipedia article about “Virgin Killer,” an album by the German rock band Scorpions, was blacklisted for three days by the Internet Watch Foundation when the album cover image was classified as child pornography. In early 2019, all language editions of Wikipedia were blocked in Venezuela probably because of a Wikipedia article that listed newly appointed National Assembly president Juan Guaidó as “president number 51 of the Bolivarian Republic of Venezuela,” thus challenging Nicolás Maduro’s presidency.[11]

How has representation on Wikipedia come to matter so much? The answer is that Wikipedia matters more in the context of the even more powerful third-party platforms that make use of its content than the way it represents subjects on its articles. What matters most is not so much how facts are represented on Wikipedia but how facts that originate on Wikipedia travel to other platforms.

Ask Google who the president of Uganda is or who won MasterChef Australia last year and the results will probably be sourced from (English) Wikipedia in a special “knowledge panel” featured on the right-hand side of the search results and in featured snippets at the top of organic search results. Ask Siri the same questions, and she will probably provide you with an answer that was originally extracted as data from Wikipedia.

Information in Wikipedia articles is being increasingly datafied and extracted by third parties to feed a new generation of question-and-answer machines. If one can control how Wikipedia defines and represents a person, place, event, or thing, then one can control how it is represented not only on Wikipedia but also on Google, Apple, Amazon, and other major platforms. This has not gone unnoticed by the many search engine optimizers, marketers, public relations, and political agents who send their representatives to do battle over facts on Wikipedia.

New Goliaths

From all appearances, then, Wikipedia is now the top dog in the world of facts. Look a little deeper into how Wikipedia arrived at this point and what role it is playing in the new web ecosystem, however, and the picture becomes a little muddier. The printed Britannica may be dead and Wikipedia may be the most popular encyclopedia, but Wikipedia is now more than just an encyclopedia, and there are new Goliaths on which Wikipedia is so dependent for its success that they could very easily wipe Wikipedia off the face of the internet.

Google has always prioritized Wikipedia entries in search results, and this is the primary way through which users have discovered Wikipedia content. But in 2012, Google announced a new project that would change how it organized search results. In a blog post entitled “Things, Not Strings,” the vice president of engineering for Google, Amit Singhal, wrote that Google was using Wikipedia and other public data sources to seed a Knowledge Graph that would provide “smarter search results” for users.[12] In addition to returning a list of possible results (including Wikipedia articles when a user searched for “Marie Curie,” for example), Google would present a “knowledge panel” on the right-hand side of the page that would “summarize relevant content around that topic, including key facts you’re likely to need for that particular thing.”[13]

Soon after Google’s announcement, former head of research at the Wikimedia Foundation, Dario Taraborelli, started taking notice of how Google represented information from Wikipedia in its knowledge panels. One of the first iterations featured a prominent backlink to Wikipedia next to each of the facts under the opening paragraph. There was even reference to the Creative Commons Attribution ShareAlike license that Wikipedia content is licensed under. But, as the panels evolved, blue links to Wikipedia articles started shrinking in size. Over time, the underscore was removed so that the links weren’t clickable, and then the links were lightened to a barely visible grey tone. Now, facts under the opening paragraph tend not to be cited at all, and hyperlinked statements refer back to other Google pages.

Taraborelli was concerned at how dependent Wikipedia was on Google and at how changes being made to the way that Wikipedia content was being presented by the search giant could have a significant impact on the sustainability of Wikipedia. If users were being presented with information from Wikipedia without having to visit the site or without even knowing that Wikipedia was the true source, then that would surely affect the numbers of users visiting Wikipedia, whether as readers, editors, or contributors to the annual fund-raising campaign. These fears were confirmed by research conducted by McMahon et al., who found that facts in the knowledge panels were being predominantly sourced from Wikipedia but that these were “almost never cited” and that this was leading to a significant reduction in traffic to Wikipedia.[14]

Taraborelli was also concerned with a more fundamental principle at issue here: that Google’s use of Wikipedia information without credit “undermines people’s ability to verify information and, ultimately, to develop well-informed opinions.”[15] Verifiability is one of Wikipedia’s core content policies. It is defined as the ability for “readers [to be] able to check that any of the information within Wikipedia articles is not just made up.”[16]

For editors, verifiability means that “all material must be attributable to reliable, published sources.”[17] Wikipedia’s verifiability policy, in other words, establishes rights for readers and responsibilities for editors. Readers should have the right to be able to check whether information from Wikipedia is accurately represented by the reliable source from which it originates. Editors should ensure that all information is attributable to reliable sources and that information that is likely to be challenged should be attributed using in-text citations.

It is easy to see Wikipedia as a victim of Google’s folly here. The problem is that a project within the Wikimedia stable, Wikidata, has done exactly the same thing, as Andreas Kolbe pointed out in response to the Washington Post story about Google’s knowledge boxes.[18] As Wikidata’s founder, Denny Vrandečić, describes in chapter 12, the project was launched to help efforts just like Google’s to better represent Wikipedia’s facts by serving as a central storage of structured data for Wikimedia projects. Yet, Wikidata has been populated by millions of statements that are either uncredited to a reliable source or attributed to the entire Wikipedia language version from where they were extracted. The latter does not meet the requirements for verifiability, one of Wikipedia’s foundational principles, because it does not give downstream users the ability to verify or check whether the statements are, indeed, reflective of their source or whether the source itself is reliable.

A number of Wikipedians have voiced concern over Wikidata’s apparent unconcern with the need for accurate source information for its millions of claims. Andreas Kolbe has contributed multiple articles about the problems with Wikidata. He wrote an op-ed about Wikidata in December 2015 as a counterpoint to the celebratory piece that had been published about the project the month prior.[19]

Kolbe made three observations about the quality of content on Wikidata. The first was the problem of unreferenced or underreferenced claims (more than half of the claims at that time were unreferenced). Second was the fact that Wikidata was extracting facts from Wikipedia and then presenting them under a more permissible copyright license than that of Wikipedia, giving the green light to third-party users like Google to use that content unattributed. And third was that there were problems with the quality of information on Wikidata because of its lack of stringent quality controls.

Kolbe noted a list of “Hoaxes long extinguished on Wikipedia live on, zombie-like, in Wikidata.”[20] Wikidata represents a strategic opportunity for search engine optimization specialists and public relations professionals to influence search results. Without stringent quality control mechanisms, however, inaccurate information could be replicated and mirrored on more authoritative platforms which would multiply their detrimental effects.

In the past few years, the list of major platforms making use of Wikipedia information (either directly or via Wikidata) has grown. Now the most important reusers are digital assistants in the form of Amazon’s Alexa, Apple’s Siri, and Google’s Assistant, which answer user questions authoritatively using Wikipedia information. The loss of citations and links back to Wikipedia has grown alongside them as problems of citation loss with Google and Wikidata have been replicated.

The problem, then, is about the process of automated extraction and the logics of knowledge bases more generally than it is about the particular practices by specific companies or organizations. In 2015 and 2016, I wrote a series of articles about this problem with Mark Graham from the Oxford Internet Institute while I was a PhD student there. We argued that the process of automation in the context of the knowledge base had both practical and ethical implications for internet users.[21]

From a practical perspective, we noted that information became less nuanced and its provenance or source obscured. The ethical case involved the loss of agency by users to contest information when that information is transported to third parties like Google. When incorrect information is not linked back to Wikipedia, users are only able to click on a link. There are no clear policies on how information can be changed or who is accountable for that information.

In one case, a journalist whose information was incorrectly appearing in the knowledge panel was informed by Google to submit feedback from multiple internet protocol (IP) addresses — every three or four days, multiple times, using different logins and to “get more people to help you submit feedback.”[22] This does not constitute a policy on rectifying false information. Compare Wikipedia’s editorial system with its transparent (albeit multitudinous) policies, and one realizes how the datafication of Wikipedia content has removed important rights from internet users.

The Right to Verifiability

Wikipedia was once celebrated because it was seen as the underdog to Big Media. As Wikipedia has become increasingly powerful as a strategic resource for the production of knowledge about the world, battles over representation of its statements have intensified. Wikipedia is strategic today, not only because of how people, places, events, and things are represented in its articles, but also because of the ways in which those articles have become fodder for search engines and digital assistants. From its early prioritization in search results, Wikipedia’s facts are now increasingly extracted without credit by artificial intelligence processes that consume its knowledge and present it as objective fact.

As one of the most popular websites in the world, it is tempting in 2020 to see Wikipedia as a top dog in the world of facts, but the consumption of Wikipedia’s knowledge without credit introduces Wikipedia’s greatest existential threat to date. This is not just because of the ways in which third-party actors appropriate Wikipedia content and remove the links that might sustain the community in terms of contributions of donations and volunteer time. More important is that unsourced Wikipedia content threatens the principle of verifiability, one of the fundamental principles on which Wikipedia was built.

Verifiability sets up a series of rights and obligations by readers and editors of Wikipedia to knowledge whose political and social status is transparent. By removing direct links to the Wikipedia article where statements originate from, search engines and digital assistants are removing the clues that readers could use to (a) evaluate the veracity of claims and (b) take active steps to change that information through consensus if they feel that it is false. Without the source of factual statements being attributed to Wikipedia, users will see those facts as solid, incontrovertible truth, when in reality they may have been extracted during a process of consensus building or at the moment in which the article was vandalized.

Until now, platform companies have been asked to contribute to the Wikimedia Foundation’s annual fund-raising campaign to “give back” to what they are taking out of the commons.[23] But contributions of cash will not solve what amounts to Wikipedia’s greatest existential threat to date. What is needed is a public campaign to reinstate the principle of verifiability in the content that is extracted from Wikipedia by platform companies. Users need to be able to understand (a) exactly where facts originate, (b) how stable or unstable those statements are, (c) how they might become involved in improving the quality of that information, and (d) the rules under which decisions about representation will be made.

Wikipedia was once recognized as the underdog not only because it was underresourced but also, more importantly, because it represented the just fight against more powerful media who sought to limit the possibilities of people around the world to build knowledge products together. Today, the fight is a new one, and Wikipedia must adapt in order to survive.

Sitting back and allowing platform companies to ingest Wikipedia’s knowledge and represent it as the incontrovertible truth rather than the messy and variable truths it actually depicts is an injustice. It is an injustice not only for Wikipedians but also for people around the world who use the resource — either directly on Wikimedia servers or indirectly via other platforms like search.

Citation: Heather Ford and Mark Graham, “Rise of the Underdog,” in Wikipedia @ 20, ed. Joseph Reagle and Jackie Koerner (Cambridge, USA: MIT Press, 2020).

Notes

[1] Joseph A. Vandello, Nadav Goldschmied, and Kenneth Michniewicz, “Underdogs as Heroes,” in Handbook of Heroism and Heroic Leadership, ed. Scott T. Allison, George R. Goethals, and Roderick M. Kramer (New York: Routledge, 2017), 339–355.

[2] Jim Giles, “Internet Encyclopaedias Go Head to Head,” Nature 438 (December 15, 2005): 900–901.

[3] Nature Online, “Encyclopaedia Britannica and Nature: A Response,” press release, March 23, 2006; Andrew Orlowski, “Wikipedia Science 31% More Cronky than Britannica’s,” The Register, December 16, 2005, https://www.theregister.co.uk/2005/12/16/wikipedia_britannica_science_comparison.

[4] Eric S. Raymond, The Cathedral and the Bazaar: Musings on Linux and Open Source by an Accidental Revolutionary (Sebastopol, CA: O’Reilly Media, 2011).

[5] Lev Grossman, “You — Yes, You — Are TIME’s Person of the Year,” Time Magazine, December 25, 2006, http://content.time.com/time/magazine/article/0,9171,1570810,00.html.

[6] Caitlin Dewey, “Wikipedia Has a Ton of Money. So Why Is It Begging You to Donate Yours?” Washington Post, December 2, 2015, https://www.washingtonpost.com/news/the-intersect/wp/2015/12/02/wikipedia-has-a-ton-of-money-so-why-is-it-begging-you-to-donate-yours/; Andrew Orlowski, “Will Wikipedia Honour Jimbo’s Promise to STOP Chugging?” The Register, December 16, 2016, https://www.theregister.co.uk/2016/12/16/jimmy_wales_wikipedia_fundraising_promise/.

[7] Aaron Mak, “Inside the Brutal, Petty War Over Donald Trump’s Wikipedia Page,” Slate, May 28, 2019, https://slate.com/technology/2019/05/donald-trump-wikipedia-page.html.

[8] Matt Reynolds, “A Bitter Turf War Is Raging on the Brexit Wikipedia Page,” Wired UK, April 29, 2019, https://www.wired.co.uk/article/brexit-wikipedia-page-battles.

[9] Aria Thaker, “Indian Election Battles Are Being Fought on Wikipedia, Too,” Quartz India, May 16, 2019, https://qz.com/india/1620023/aatish-taseers-wikipedia-page-isnt-the-only-target-of-modi-fans/.

[10] Nima Nazeri and Collin Anderson, Citation Filtered: Iran’s Censorship of Wikipedia (University of Pennsylvania Scholarly Commons, November 2013), https://repository.upenn.edu/iranmediaprogram/10/.

[11] NetBlocks, “Wikipedia Blocked in Venezuela as Internet Controls Tighten,” NetBlocks, January 28, 2019, https://netblocks.org/reports/wikipedia-blocked-in-venezuela-as-internet-controls-tighten-XaAwR08M.

[12] Amit Singhal, “Introducing the Knowledge Graph: Things, Not Strings,” The Keyword, May 16, 2012, https://www.blog.google/products/search/introducing-knowledge-graph-things-not/.

[13] Singhal, “Introducing the Knowledge Graph.”

[14] Nicholas Vincent, Isaac Johnson, and Brent Hecht, “Examining Wikipedia With a Broader Lens,” in CHI ’18: Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems, ed. R. Mandryk and M. Hancock (New York: ACM, 2018), https://doi.org/10.1145/3173574.3174140.

[15] Quoted in Caitlin Dewey, “You Probably Haven’t Even Noticed Google’s Sketchy Quest to Control the World’s Knowledge,” The Washington Post, May 11, 2016, https://www.washingtonpost.com/news/the-intersect/wp/2016/05/11/you-probably-havent-even-noticed-googles-sketchy-quest-to-control-the-worlds-knowledge/.

[16] Wikipedia, s.v. “Wikipedia: Verifiability,” last modified January 13, 2010, https://en.wikipedia.org/wiki/Wikipedia:Verifiability.

[17] Wikipedia, s.v. “Wikipedia: Verifiability.”

[18] “In the Media,” Wikimedia Signpost, May 28, 2016, https://en.wikipedia.org/wiki/Wikipedia:Wikipedia_Signpost/2016-05-17/In_the_media.

[19] Andreas Kolbe, “Whither Wikidata?” The Signpost, December 2, 2015, https://en.wikipedia.org/wiki/Wikipedia:Wikipedia_Signpost/2015-12-02/Op-ed.

[20] Kolbe, “Whither Wikidata?”

[21] Heather Ford and Mark Graham, “Provenance, Power and Place: Linked Data and Opaque Digital Geographies,” Environment and Planning D: Society and Space 34, no. 6 (2016): 957–970, https://doi.org/10.1177/0263775816668857; Heather Ford and Mark Graham, “Semantic Cities: Coded Geopolitics and the Rise of the Semantic Web,” in Code and the City, ed. Rob Kitchin and Sung-Yueh Perng (Oxford, UK: Routledge, 2015).

[22] Rachel Abrams, “Google Thinks I’m Dead,” The New York Times, December 16, 2017, https://www.nytimes.com/2017/12/16/business/google-thinks-im-dead.html.

[23] Brian Heater, “Are Corporations that Use Wikipedia Giving Back?” TechCrunch, March 24, 2018, https://techcrunch.com/2018/03/24/are-corporations-that-use-wikipedia-giving-back/.